First 3 Months as a DevOps Engineer; 2024 Q2 Retrospective

My First Retrospective #

To start with the conclusion: I can confidently say that over the past 3 months, I’ve been sitting in front of a computer whenever I wasn’t eating or sleeping. But I enjoyed every moment of it.

This is actually my first retrospective. I’ve been writing daily journals since high school, and I’ve been keeping work logs in Markdown on Obsidian every day since March this year. While I’ve habitually kept records, I don’t think I’ve had many opportunities to seriously reflect on myself like this. Of course, this too is more meaningful as a record.

After internships as a developer, being part of a startup team, and returning to university last year, I was a student and research lab intern before officially starting work at a new company in March. So naturally, there’s plenty to write about in this retrospective. While there were certainly many other events besides what I mention in this post, I don’t want to put too much effort into the retrospective itself, as that might make it difficult to keep writing regularly. So for now, I’ll be satisfied with this much.

First 3 Months as a DevOps Engineer #

I’ve dreamed of the DevOps engineer position for quite a long time. When I first learned development after entering university, I started with frontend, then moved to backend, experiencing a wide variety of tech stacks (probably partly because I enjoy using new languages and frameworks as a hobby). However, the more I developed, the more interested I became in 1) networks and distributed systems, and 2) infrastructure. And while I was in the perhaps undeserved position of tech lead in a startup team, I naturally became interested in the term DevOps while focusing on CI/CD pipelines and infrastructure configuration.

Early this year, I was looking for a job where I could fulfill my military service as industrial technical personnel. However, given the nature of the work, I judged that a DevOps engineer position required a lot of know-how and experience. Even though I had experienced programming in various ways, I still lacked the practical experience with large-scale real-world applications to handle such a position. Nevertheless, my desire to do work that I could genuinely enjoy and immerse myself in, for at least 2 years at the company I would join, turned out to be stronger. So I actually spent a long time deliberating whether to apply as a backend engineer or search for a job as a DevOps engineer.

After much consideration, on March 4th, I was able to start my first day as a DevOps engineer at an awesome company called Channel Talk. I won’t go into detail about my journey to joining Channel Talk in this post, because this post is literally about looking back on what happened during Q2, from March to June.

Since I’m cautious about writing too much detail about company work, I’ll just briefly list what I’ve been doing during this time.

1) Setting up and managing development servers #

One of the typical roles and responsibilities (R&R) of a DevOps team is, of course, infrastructure management. This covers development servers as well as the production stage. Channel Talk currently manages most of its infrastructure with EKS and Terraform. I had experience with both, so adapting wasn’t very difficult. However, managing them at the level of a real service was a very rare experience.

Even when setting up development servers, there are cases where related components are needed, not just a single server. Examples include a DB, logging, or other servers that the service depends on. If frontend and backend developers need new development environments and servers for new feature development, setting these up is also one of the roles of the DevOps team. So there are often cases where I spend more time handling Terraform (HCL) and YAML for K8s than actually coding.

However, it was a valuable experience that made me think a lot about AWS services I hadn’t used before and cost optimization, which I didn’t need to consider when developing alone.

2) Optimizing CircleCI and Docker base images #

Like many tech companies today, most services in our company are built and operated as Docker containers. In our case, we build and use our own base images rather than directly using public images from docker.io.

I was in charge of tasks such as 1) removing CVE vulnerabilities from these Docker base images and 2) removing buildx (the QEMU emulator) from the build, since emulated docker builds are slower than builds on native machines. Through this work, I got to know CircleCI, the main CI pipeline we were using, and while fixing numerous CVE vulnerabilities and modifying Dockerfiles, I was also able to study core libraries of various Linux distributions (e.g., glibc).

3) ARC; Github Actions as Kubernetes self-hosted runner #

Github: https://github.com/actions/actions-runner-controller

We had been using CircleCI, but we’re now moving our pipelines one by one to GitHub Actions. For this, we wanted to use self-hosted runners instead of GitHub-hosted runners, and I was in charge of researching and carrying out this work.

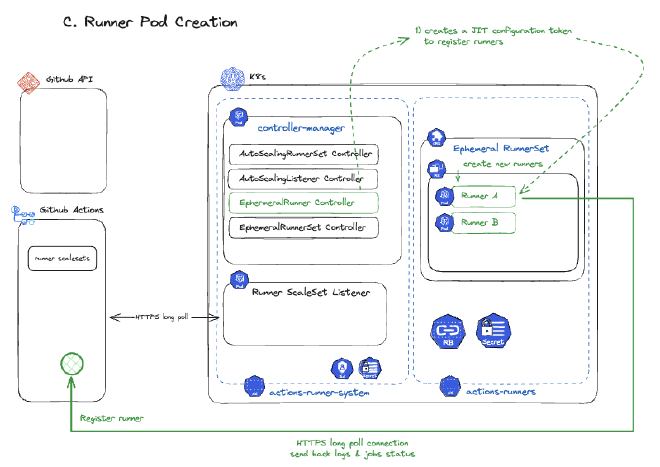

GitHub itself already provides a controller that integrates GitHub Actions self-hosted runners with Kubernetes. The diagram in this section is one that I drew myself during the PoC and research stage before adopting it.

privileged containers. I also looked into numerous methods of building containers without a Docker daemon. In particular, I spent a lot of time on Kaniko, and I hope I can introduce the content I researched about this in another post.

4) Maintaining the internal deployment system #

Currently, Channel Talk has a separate internal deployment system, which is mainly managed by the DevOps team. From my perspective, the current DevOps team at our company seems to be in charge of platform engineering as well. (If you’re curious about platform engineering, you might want to read this post from Kakao Pay explaining the concept). You can read about the internal deployment system originally created by Channel Talk on Channel Talk’s tech blog, although today’s deployment system differs slightly from what that blog describes.

Today, the internal deployment system is operated by a Kubernetes operator created by the team. So it was a really valuable experience to see and work with Kubernetes operator code, which is quite different from the REST API servers I developed when doing backend development.
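To give a rough feel for why operator code reads so differently from a REST handler, here is a minimal sketch of the reconcile pattern that operators are generally built around. It is plain Python with no Kubernetes client, and every name in it (`DesiredState`, `ObservedState`, `reconcile`, the `api:v2` image) is hypothetical and made up for illustration; it is not how Channel Talk’s operator is written.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DesiredState:
    """Hypothetical custom-resource spec: what the user declared."""
    image: str
    replicas: int


@dataclass
class ObservedState:
    """Hypothetical snapshot of what is actually running."""
    image: str
    replicas: int


def reconcile(desired: DesiredState, observed: ObservedState) -> list[str]:
    """Move the observed state toward the desired state.

    A REST handler reacts once to one request. A reconcile function is
    called again and again, so it only compares states and must be
    idempotent: calling it on an already converged system does nothing.
    """
    actions = []
    if observed.image != desired.image:
        actions.append(f"roll out image {desired.image}")
        observed.image = desired.image  # stand-in for a real cluster update
    if observed.replicas != desired.replicas:
        actions.append(f"scale {observed.replicas} -> {desired.replicas}")
        observed.replicas = desired.replicas
    return actions


desired = DesiredState(image="api:v2", replicas=3)
observed = ObservedState(image="api:v1", replicas=1)

first = reconcile(desired, observed)   # drift exists, so actions are produced
second = reconcile(desired, observed)  # already converged, nothing left to do
print(first)
print(second)
```

The second call returning an empty list is the point: the function describes a target state rather than a sequence of commands, which is the main shift in thinking compared with request/response code.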

Weekend As a Solo Developer #

From here on, it’s my personal story unrelated to company work. Of course, like the previous content, almost everything is about computers 🤓. On weekdays, I focus on company work during work hours, and after work, I spend about 30 minutes to 1 hour 30 minutes developing as a hobby. And from Friday evening to the weekend, I develop until dawn, which is my current lifestyle.

Building a 120GB-Memory Kubernetes Cluster in My Room #

This is the current state of the room I live in. The LEDs are so bright that it’s difficult to fall asleep without an eye mask if I don’t have a way to turn them off immediately. My home network currently consists of two desktops, one MacBook Air, and one Synology NAS.

The specs of the on-premise Kubernetes cluster I’ve set up in my room currently total 48 CPU cores, 120GB of Memory, and 16TB of Storage.

How am I using this massive cluster? I’ll explain that later when I get the chance. There were many challenges and learning points while setting up the home server. In particular, all the computers you see in the picture now have different operating systems installed. From right to left, they are 1) Ubuntu server 24.04, 2) Asahi Linux (Fedora remix), 3) Arch Linux. And setting up on-premise Kubernetes on each of these different operating systems was quite a challenge. When configuring the home network, I even tore apart the junction box in the shoe rack, and looking back now, I think I was working with half-crazed focus.

I was able to present these processes at an internal development seminar at the company, and I hope I can introduce related materials and content in another post later.

Adapting to Neovim (Complete) #

For a long time, I wandered between two IDEs: JetBrains IDEs and Visual Studio Code. But now I’ve found an application that completely ends this dilemma. It’s Neovim.

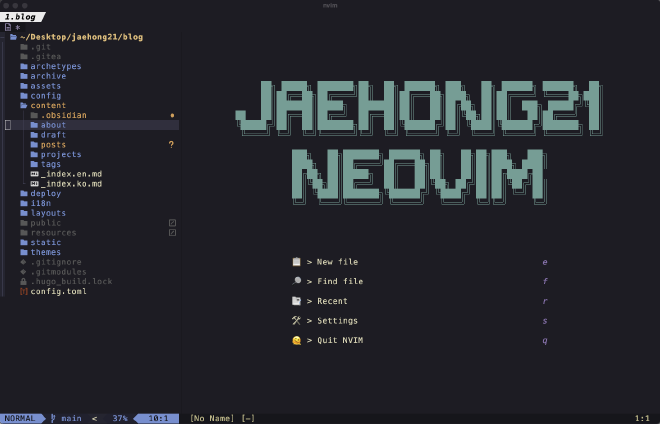

Isn’t it beautiful? Now I can start developing right away, even in a simple terminal. Before this, I had consistently been using vim itself through extensions like VSCodeVIM or IntelliJ Vim in VSCode or Jetbrains. Neovim has a much larger community and extension ecosystem than the original vim, which lets you configure a practical development environment with vim.

Now it’s been about two months since I started using Neovim, and I’ve reached a stage where I have no difficulty in doing my work. Based on my experience so far, I can briefly summarize the pros and cons as follows:

Neovim Pros

- Memory usage is very low. It uses significantly fewer computer resources than VSCode, and especially than Jetbrains products. It’s not much different from using a terminal.

- By managing ~/.config/nvim with Git, you can configure (reproduce) the same development environment in any environment. Personally, I think this is the biggest advantage.

- Centered around Neovim plugin managers (e.g., lazy.nvim), it provides very high freedom in development environment setup by utilizing numerous open sources.

Neovim Cons

- Being able to customize everything means you have to set up everything yourself. You need to set up LSP, Keymaps, UI configuration, and everything else yourself.

- Since it’s essentially an upgrade of VIM, if you can’t handle VIM, the hurdle is very high.

- It’s a bit unrealistic to catch up with all the conveniences that IntelliJ IDEA provides for Java. However, there’s no problem in handling Golang, Javascript, Python, etc.

Regardless of these pros and cons, I’m very satisfied with my Neovim usage experience. And I express my gratitude to our company team member @Claud who introduced Neovim to me and taught me how to use it. The Neovim settings I’ve configured to date are uploaded on my Github. (https://github.com/jaehong21/neovim-config)

Hibiscus; My First TUI Development #

Github: https://github.com/jaehong21/hibiscus

The tool I use most often along with Neovim is lazygit. lazygit immediately shows a GUI in the terminal and allows you to execute frequently used git commands with shortcuts. This UI that shows in the Terminal and allows interaction without a mouse is often called TUI (Terminal UI).

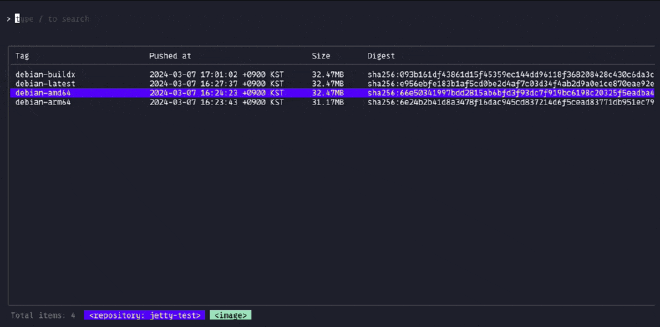

While happily using lazygit, I found it very inconvenient (as always) to go through the AWS web console when setting up development environments at work. It was a bit slow to respond, and switching between multiple accounts was especially troublesome. So, as I was doing a lot of docker build related work at the time, I decided to create a TUI to view and edit ECR myself.

I used Golang, our team’s main stack, to build it, and the experience of constructing a UI with Golang was quite new. Although the project is currently on hold due to other TODOs, I aim to steadily add support for other AWS services in the future.

+) The project is named hibiscus because I’ve been enjoying hibiscus tea at the in-house cafe these days.

Today’s Frontend Ecosystem is Too Fast #

Subtitle: My First Svelte4 Experience

I’ve now somewhat distanced myself from frontend. However, frontend still holds symbolic significance as the area I first entered when I started development, and I haven’t lost interest in it. It’s just that I now lack the time to study frontend.

Everyone knows that the JS ecosystem is changing rapidly day by day these days. Among them, I wanted to settle on my main frontend tech stack. React was the framework I used most often, and NextJS was the current trend, but NextJS felt a bit overwhelming for my personal purposes. So, I tried out the following frameworks for 2-3 weeks.

- HTMX

- NextJS 14

- Svelte4 + Svelte5 rc

- QwikJS

I made another side project with these frameworks, but it’s not complete yet and is still in a demo state, so I’m a bit embarrassed to make it public. After using these frameworks, Svelte4 was the most satisfying, and I ended up building the side project with Svelte4 as well. However, many breaking changes were announced for Svelte5, and personally, the direction Svelte5 was pursuing was quite different from what I found satisfying in Svelte4.

To conclude, I’ve currently settled back on React18, and when React19 is announced, I think I’ll have to try it again. The JS ecosystem, especially on the frontend, keeps changing in the blink of an eye these days, and I keep feeling overwhelmed trying to keep up with the trends.

Git Protocol Deep Dive #

I’m self-hosting my personal Git server on my home server in my room using the Gitea project, similar to GitLab. Before settling on Gitea, I tried several other self-hosting Git projects including GitLab.

And as I kept using Gitea, I thought, “Couldn’t I just make my own Git from scratch?” So I immediately started development and constructed the following service architecture. About 80% of what you see in today’s diagram is already completed, except for the Desk (client) part.

Some of you will surely be curious about git-http-backend in this architecture. I’ll cover this part separately in another blog post.

While it’s difficult to cover everything in this post, I started building my own Github/Gitea from scratch. In the process, I was able to learn the specifications of the different Git protocols (SSH/HTTP/Local) in detail, and to roughly distinguish which features belong to git-core and which are provided by hosting providers like Github.
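As a small taste of what those specifications look like, Git’s wire protocols frame their messages in the pkt-line format: four hexadecimal digits giving the total length (including the four digits themselves), followed by the payload, with `0000` acting as a flush packet. Below is a minimal sketch of an encoder and decoder. The function names are my own for this post, not taken from my project or from Git, and the decoder deliberately rejects the other special lengths used by protocol v2.

```python
from __future__ import annotations

FLUSH_PKT = b"0000"          # special packet that marks the end of a section
MAX_PKT_LEN = 65520          # largest total length a single pkt-line may have


def encode_pkt_line(payload: bytes) -> bytes:
    """Prefix the payload with its total length as 4 lowercase hex digits."""
    length = len(payload) + 4  # the length field counts its own 4 bytes
    if length > MAX_PKT_LEN:
        raise ValueError("payload too large for a single pkt-line")
    return f"{length:04x}".encode("ascii") + payload


def decode_pkt_lines(data: bytes) -> list[bytes | None]:
    """Split a byte stream into payloads; a flush packet becomes None."""
    packets: list[bytes | None] = []
    pos = 0
    while pos < len(data):
        length = int(data[pos:pos + 4].decode("ascii"), 16)
        if length == 0:
            packets.append(None)  # flush packet carries no payload
            pos += 4
            continue
        if length < 4:
            # 0001/0002 exist in protocol v2 but are out of scope here
            raise ValueError(f"unsupported pkt-line length: {length}")
        packets.append(data[pos + 4:pos + length])
        pos += length
    return packets


# The first lines of a smart HTTP ref advertisement look like this.
header = encode_pkt_line(b"# service=git-upload-pack\n")
stream = header + FLUSH_PKT
decoded = decode_pkt_lines(stream)
print(header)
print(decoded)
```

Framing like this belongs to Git itself, while things like pull requests or web UIs sit entirely on the hosting side, which is the kind of boundary I only understood vaguely before this project.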

Once this service is complete, it would feel like a waste to use it alone, so I’m planning to publish it in some form.

Outside of Programming? #

There’s really nothing. It seems like I’ve just been sitting in front of a computer throughout Q2.