Three months flew away; 2024 Q3 Retrospective

The Past Three Months Flew By

Really, three months passed by in the blink of an eye. As I repeated my daily routine of work and development at home, there were no major events, but looking back, it was quite a busy 90 days.

In June of this year, I wrote my first retrospective, and I was afraid it might be my last. But now, seeing myself writing this post again, I want to give myself a little credit for taking that second step. In my previous retrospective, I separated my work at the company from my hobby development at home, but this time, I’m just going to list what I’ve done, without any specific sections.

It’s likely to be a long read, but I’m confident the content will be interesting. If you happen to come across this post, I hope you stick with it until the end 😁.

The table of contents is as follows:

- Custom Domain Support

- Mermaid

- Terragrunt

- OpenSearch and CoreDNS

- Java, AmazonLinux, Karpenter Version Updates

- Github Actions Runners Stopped

- Rebuilding Zsh

- Kafka Session 101, 201

- Now I Love Cloudflare Workers

- (WIP) What I’m Currently Working On

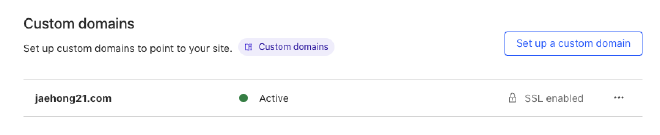

1) Custom Domain Support

You might have seen services like Vercel or Github where you can use your own custom domain instead of .vercel.app or .github.io, by adding a CNAME or TXT record or similar setup. In July, I spent a lot of time implementing and refactoring this feature. Initially, I felt a lot of excitement as I was working on a feature that I had previously only used blindly with managed services. Solving this issue cloud-natively using Kubernetes was also a pleasure.

In simple terms, the two main points were 1) automatically issuing certificates with the HTTP-01 Challenge and 2) dynamically routing traffic for each domain and returning the relevant webpage. Beyond just making it work, I focused on details such as how to maintain and manage the system and how to handle a wide range of edge cases (like managing a large number of certificates, dealing with incorrect CNAME records, and coping with high traffic or numerous routes).
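One of those edge cases, an incorrect CNAME record, can be caught before a certificate is ever requested. The following is only a minimal sketch, not the actual implementation: it assumes customers are asked to point their domain at a single CNAME target, and the names `EXPECTED_TARGET`, `custom.example-platform.com`, `normalize_host`, and `cname_matches` are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: checks whether a customer's CNAME points at the platform.
# EXPECTED_TARGET is an assumed value for illustration only.
EXPECTED_TARGET="custom.example-platform.com"

# Lowercase a hostname and drop the trailing dot that DNS tools usually print.
normalize_host() {
  printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | sed 's/\.$//'
}

# Succeeds (exit status 0) only when the observed CNAME equals the expected target.
cname_matches() {
  [ -n "$1" ] && [ "$(normalize_host "$1")" = "$(normalize_host "$EXPECTED_TARGET")" ]
}

# Usage (needs network access and dig, so it is left as a comment):
#   observed="$(dig +short CNAME blog.customer.example | head -n 1)"
#   cname_matches "$observed" && echo "CNAME looks right, safe to request a certificate"
```

Running a check like this first avoids spending HTTP-01 attempts on domains that cannot pass validation yet.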

One of the most enjoyable aspects was examining Cert Manager and Let’s Encrypt for certificate issuance, and using an L4 NLB for TLS passthrough instead of the AWS ALB I usually rely on.

I could share more about the architecture with diagrams, but for now, I’ll save that for another post, where I can delve into it with more detail. (Time rarely allows for this… there’s a backlog of blog posts to write)

2) Mermaid

Starting around July, I began to use Mermaid heavily. In my previous retrospective and the recent post about Redis streams, I introduced a lot of content using Mermaid. I used to rely a lot on Excalidraw, and even self-hosted it (https://excalidraw.jaehong21.com). However, one of the issues I faced was how to store the project. With Excalidraw, I had to either export the project as a .excalidraw file or save it as a .png, which made it uneditable.

In contrast, Mermaid allows you to save it as a simple text file, like code. Additionally, Markdown, Notion, and Github all support Mermaid previews natively. Before learning Mermaid, I didn’t realize it supports not only basic flowcharts but also class diagrams, ERD, packet diagrams, mind maps, and much more.
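To make the "plain text, like code" point concrete, here is a small sketch that writes a flowchart to a file that could be committed to Git. The file name `custom-domain.mmd` and the nodes in the diagram are made up for illustration.

```shell
#!/usr/bin/env bash
# Write a Mermaid flowchart to a plain-text file (hypothetical file name and nodes).
cat > custom-domain.mmd <<'EOF'
flowchart LR
    Client -->|HTTPS| NLB
    NLB --> Router
    Router --> Page[Customer page]
EOF

# Because it is plain text, ordinary tools such as grep and git diff work on it.
# This counts the edges in the diagram.
grep -c -- '-->' custom-domain.mmd
```

Pasting the same text into a code fence tagged `mermaid` in a Markdown file on Github renders it as a diagram.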

Of course, one downside is that it’s difficult to position components flexibly. The fact that graphs are drawn declaratively is both its biggest strength and weakness. Even so, due to its excellent compatibility and versatility, it’s definitely worth learning. (I picked it up after seeing one of my teammates @Claud using it.)

3) Terragrunt

Like many other companies, the one I work for, Channel Talk, manages its infrastructure using Terraform. However, as the number of regions to manage has increased and more components and modules have been added, it became obvious that covering most of the infrastructure with a single, large Terraform state was becoming unmanageable (in fact, it had been difficult for a while).

So, from July until now, we’ve been working on a large-scale refactor of our Terraform project (Git repo). Along with that, I’ve been researching Terragrunt, a tool designed with the philosophy of keeping your Terraform code DRY. It’s especially suited for use cases where similar infrastructure needs to be replicated across multiple regions or accounts.

Managing infrastructure with a tool like Terraform becomes more challenging once an architecture is set, as changes are difficult and must be done with care. One key takeaway from researching Terraform v2 is that more thought needs to be given to policies and maintenance rather than technical difficulties. This is something I’ve been learning continuously since joining the DevOps team, not just while working with Terragrunt.

On a side note, we also need to implement CI/CD pipelines for Terraform… We still need to decide on policies like Apply after Merge vs. Apply before Merge.

4) OpenSearch and CoreDNS

It’s a bit delicate to talk about, but there were many eventful days in Q3, as seen on the Channel Talk Status page. Although the incidents listed there weren’t directly related to my work, there were recurring issues with OpenSearch and CoreDNS this year (which are now mostly resolved).

Even though these issues caused some headaches, I learned a lot. Both OpenSearch and CoreDNS had infrastructure-level problems rather than application-layer bugs. Particularly, CoreDNS is an essential component within the Kubernetes ecosystem.

OpenSearch was quite unfamiliar to me, as I hadn’t worked much with ElasticSearch or the ELK stack. However, this was a great opportunity to strengthen my understanding of OpenSearch, and it gave me a chance to study CoreDNS, which I had previously taken for granted. I finally finished reading my long-overdue O’Reilly CoreDNS book!

Working in a cloud-native environment using Kubernetes and various AWS infrastructure, I was able to experience and observe firsthand how errors propagate when issues arise and how to trace the source of those errors. It became clear that alongside building performant and functional architecture, visibility into the system and a quick response during incidents are equally important.

5) Java, AmazonLinux, Karpenter Version Updates

The title alone makes me feel out of breath. Each of these on its own would be a significant event, but surprisingly, all three underwent changes during August. The Java and AmazonLinux updates didn’t take much time in terms of actual implementation. However, since these were updates that needed to be applied to the main API servers, we had to proceed with great caution, which took some time. While the Java update was just a patch, the Linux update involved upgrading from AL2 to AL2023. Special thanks to @Pepper for diligently monitoring JVM metrics through Datadog and Grafana during this process. The challenge with these updates was how to swap out the main API servers seamlessly, without downtime. There are plenty of methods for achieving zero-downtime replacements, but our focus was on finding a simple solution that would allow for quick rollbacks if any issues arose.

The Karpenter update also required a lot of effort. When I first joined Channel Talk, Karpenter was already quite stable, using the NodePool CRD. However, the EKS cluster in a different region, which I was managing (I got assigned to it through a lottery), was still using the old Provisioner CRD and awaiting updates. Even though I wouldn’t be using this outdated CRD going forward, I still needed to study it thoroughly to ensure a smooth update. Since Karpenter directly affects the EKS nodes, we had to be extra cautious. There were some hiccups along the way, but thanks to @Lento, who helped me through the late-night troubleshooting, we managed to pull through.

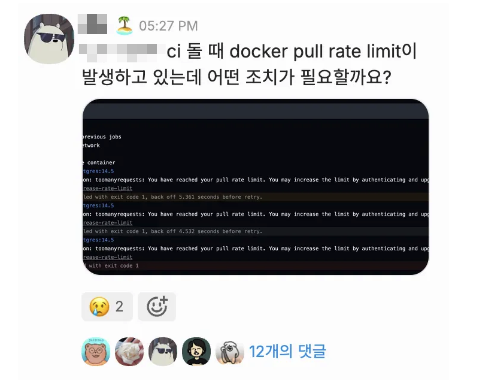

6) Github Actions Runners Stopped

As mentioned in my previous retrospective, my primary responsibility since joining Channel Talk has been migrating from CircleCI to Github Actions (GHA). One unique aspect is that we set up Github Actions using self-hosted runners within Kubernetes.

Generally, companies switch from managed to self-hosted runners for reasons related to 1) security and 2) cost. Additionally, there’s the issue of availability. For instance, relying solely on CircleCI would mean that a CircleCI outage could paralyze the entire CI pipeline. And in fact, CircleCI does tend to experience more issues than one might expect.

At first, there weren’t many CI pipelines using GHA, so it wasn’t a major concern. But by September, as most projects had migrated to GHA, we had to start paying attention to the availability of the GHA runners themselves. Two unexpected issues cropped up: 1) Github Token Expiry and 2) Docker Hub Rate Limits.

Both issues might seem simple but are critical enough to halt the entire CI pipeline. It was a bit frustrating to realize that I had missed these details, and it reminded me once again of the responsibility and pressure that comes with potentially inconveniencing developers within the company.

The Docker Hub rate limit issue has now been resolved, but there’s a lot I could say about it. I’ll cover it in detail in another post (one of many in my backlog of posts to write). Here’s a sneak peek of what that post will cover:

- Docker pull-through cache (with ECR and Harbor)

- Mirror registry

- Using Istio to reroute Docker Hub → ECR traffic…
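Until that post exists, one small thing worth keeping an eye on is how close the runners are to the limit. Docker's documentation describes `ratelimit-limit` and `ratelimit-remaining` response headers with values of the form `100;w=21600` (pulls allowed, then the window in seconds). The sketch below only parses such a value; the function names and the default threshold of 20 are assumptions for illustration, and fetching the header itself is left to the procedure in Docker's documentation.

```shell
#!/usr/bin/env bash
# Parse a Docker Hub rate-limit header value such as "100;w=21600"
# into "<pulls> <window-seconds>". Pure string handling, no network needed.
parse_ratelimit() {
  local value="$1"
  local pulls="${value%%;*}"      # text before the first ';'
  local window="${value##*w=}"    # text after 'w='
  printf '%s %s\n' "$pulls" "$window"
}

# Succeeds when fewer pulls remain than the threshold
# (second argument; 20 is an assumed default, not a recommendation).
ratelimit_low() {
  local remaining
  remaining="$(parse_ratelimit "$1" | cut -d' ' -f1)"
  [ "$remaining" -lt "${2:-20}" ]
}

# Example: ratelimit_low "5;w=21600" && echo "almost out of anonymous pulls"
```

A check like this could feed an alert before CI jobs start failing on image pulls.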

7) Rebuilding Zsh

While using oh-my-zsh, a strange competition started among my team. It was a shell startup time competition.

```zsh
# Start timer
zmodload zsh/datetime
zsh_start_time=$EPOCHREALTIME

# ... the rest of .zshrc goes here ...

# End timer and calculate duration
zsh_end_time=$EPOCHREALTIME
zsh_load_time=$(echo "($zsh_end_time - $zsh_start_time) * 1000" | bc)
echo "zsh startup time: ${zsh_load_time}ms"
```

By placing this code at the top and bottom of the .zshrc file, we could measure how fast the shell started. My Zsh startup time was usually around 400 ~ 500ms, which I didn’t find particularly bothersome. However, as someone who uses Neovim and numerous terminal windows, I got competitive and decided to optimize it.

Upon hearing from team member @Tanto that oh-my-zsh was super slow, I decided to switch everything over to a different plugin manager, Antidote, along with Powerlevel10k. I also replaced NVM (Node Version Manager), which was consuming most of the startup time, with a Rust-based alternative called mise. After completing all the setup, I managed to reduce the shell startup time to around 80ms.
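For anyone who would rather keep NVM, a commonly used alternative (not the approach taken here) is to lazy-load it, so that the expensive `nvm.sh` is sourced only the first time `nvm` is called. A minimal sketch, assuming NVM is installed in its default `$HOME/.nvm` location:

```shell
# Lazy-load NVM: define a lightweight stub instead of sourcing nvm.sh at startup.
# Assumes NVM lives in $NVM_DIR (default: $HOME/.nvm).
export NVM_DIR="${NVM_DIR:-$HOME/.nvm}"

nvm() {
  # Remove this stub so the real nvm function can take its place.
  unset -f nvm
  # Source the real NVM only now, on first use.
  [ -s "$NVM_DIR/nvm.sh" ] && . "$NVM_DIR/nvm.sh"
  # Forward the original arguments to the real nvm.
  nvm "$@"
}
```

The trade-off is that `node` and `npm` installed through NVM are not on `PATH` until `nvm` has been called once in that shell.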

However, the next problem was the inconvenience of losing some of the autocompletions and features like autojump. Taking advice from @Claud, I decided to give the Fish shell a try, as it comes with many of these features built in.

The result? Satisfaction. I’ve now switched from Zsh to Fish shell and, with a few tools combined, have kept the startup time under 100ms.

8) Kafka Session 101, 201

In September, Confluent held Kafka Sessions 101 and 201. Kafka 101 covered the basics, while Kafka 201 delved into Producer ACKs, Consumer Rebalancing, and Replica Recovery. As I hadn’t had much time to study Kafka before, these sessions were genuinely informative.

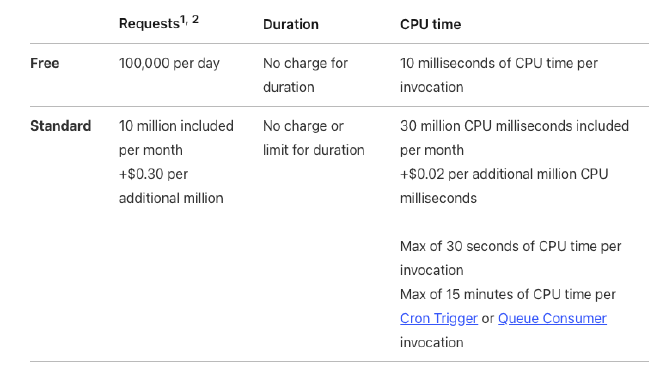

9) Now I Love Cloudflare Workers

When working on side projects these days, I mainly use Cloudflare Workers (Cloudflare’s version of AWS Lambda). Even though I have several home servers with over-the-top specs, I still think managed services (especially serverless ones, assuming they are free) are unbeatable in terms of stability. Below is the Cloudflare Workers Pricing.

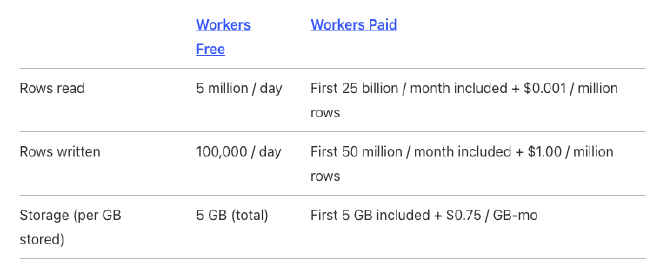

Its pricing is just unbelievable 🫠. The Standard Plan, which costs only $5 per month, is astonishing. I’ve also been using Cloudflare D1 recently, which is a serverless database powered by a version of SQLite tuned by Cloudflare. This service also has a free tier, and its paid usage is covered by the same Standard Plan mentioned above.

Here’s the D1 Pricing.

For side projects or simple products, you can get almost everything done for free using just these two services. Additionally, Durable Objects allows you to build WebSocket chat servers in a serverless environment, but I won’t cover that here. Just like with Kafka 101, I’ll be back with a “Cloudflare Free-Tier 101” someday. Recently, I’ve been having fun experimenting with Cloudflare’s features, enjoying the cost-efficiency while building side projects.

10) (WIP) What I’m Currently Working On

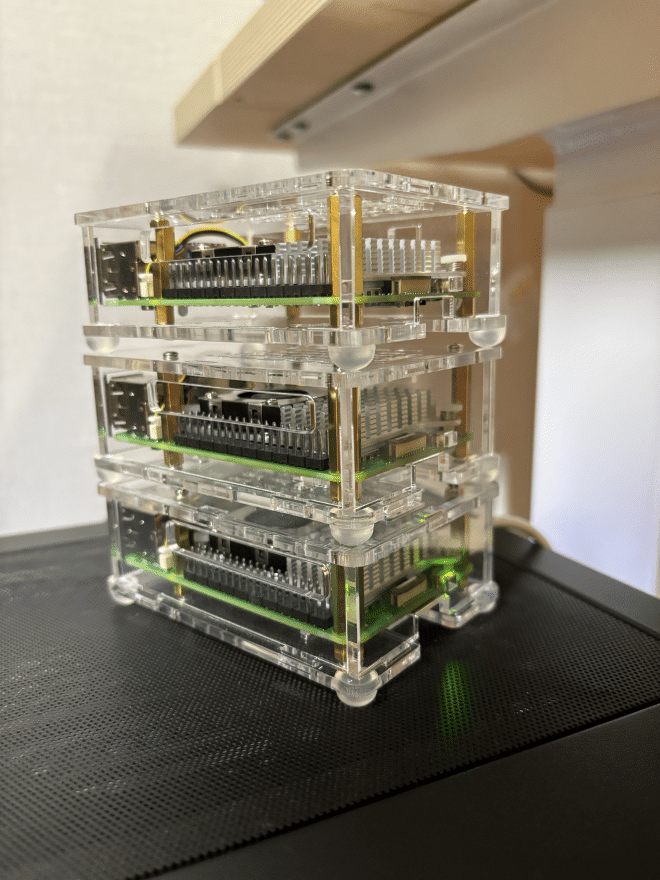

Recently, I bought two more Raspberry Pi 5s. With my NAS included, I now manage a total of 7 nodes. It was easy to manage the first two or three, but now I’m having a hard time deciding on new server names whenever I add one.

My personal goal for October is to implement a multi-cluster setup using Istio. Recently, I replaced all my home server clusters’ ingress-nginx with Istio. The next step is to build a multi-cluster environment using Istio and also upgrade Prometheus, like at work, by incorporating Thanos to improve high availability and stability.

The goal is not only to create a multi-cluster environment at home but also to use cloud, school, and other locations to establish real DR (disaster recovery) and HA (high availability) setups.