Python GIL (Global Interpreter Lock)


Introduction

Python's slow speed compared to other programming languages has always been an issue, and it cannot be separated from the GIL. The Global Interpreter Lock (GIL) is one of the most important keywords to understand when trying to use multithreading in Python.

The test environment where the code below was executed (CPU counts reported by `sysctl`):

```
$ sysctl hw.physicalcpu hw.logicalcpu
hw.physicalcpu: 8
hw.logicalcpu: 8
```
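The `sysctl` keys above are macOS-specific. On other systems, one portable check from Python is `os.cpu_count()`. It reports logical CPUs only, so it is a rough equivalent of `hw.logicalcpu`, not of `hw.physicalcpu`.

```python
import os

# os.cpu_count() returns the number of logical CPUs, or None if it cannot be determined
logical = os.cpu_count()
print(f"logical CPUs: {logical}")
```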

Test code

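```python
import random
import threading
import time

# CPU-bound work: find the max number in a randomly generated list
# (the list holds 500 million floats and needs many GB of RAM; shrink the range to experiment)
def working():
    max([random.random() for _ in range(500000000)])

# 1. Single thread: run the work twice, one after the other
s_time = time.time()
working()
working()
e_time = time.time()
print(f'Single thread: {e_time - s_time:.5f}')

# 2. Double threads: run the same work in two threads at once
s_time = time.time()
threads = []
for _ in range(2):
    threads.append(threading.Thread(target=working))
    threads[-1].start()
for t in threads:
    t.join()
e_time = time.time()
print(f'Double threads: {e_time - s_time:.5f}')
```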

Result (elapsed time in seconds):

```
Single thread: 70.46266
Double threads: 103.42579
```

Naively, we would expect two threads to finish faster than a single thread doing the same work twice. Ironically, in Python the two-thread run is noticeably slower (about 103 s versus 70 s). The reason is the GIL.

GIL

The Global Interpreter Lock is a lock in CPython, the standard Python interpreter, that allows only one thread at a time to execute Python bytecode. The thread holding the GIL runs, and every other thread has to wait until the lock is released. It is similar to a mutex lock in concurrent programming.
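The following toy simulation makes the mutex analogy concrete. It is not how CPython implements the GIL. An ordinary `threading.Lock` with the hypothetical name `fake_gil` stands in for the GIL, and three threads must hold it before doing each step of "work". The counters show that no two threads are ever inside the locked section at the same time.

```python
import threading
import time

# Hypothetical stand-in: an ordinary lock plays the role of the GIL.
# The real GIL is internal to CPython and cannot be acquired from Python code.
fake_gil = threading.Lock()
running = 0       # threads currently inside the locked section
max_running = 0   # highest value of `running` ever observed
order = []        # which thread ran each step, in execution order

def worker(name, steps):
    global running, max_running
    for _ in range(steps):
        with fake_gil:               # a thread must hold the lock to "execute"
            running += 1
            max_running = max(max_running, running)
            order.append(name)
            time.sleep(0.001)        # pretend to run some bytecode
            running -= 1
        # leaving the with-block releases the lock so another thread can take it

threads = [threading.Thread(target=worker, args=(f"T{i}", 5)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(max_running)  # 1: no two threads were ever inside the lock together
print(len(order))   # 15: three threads x five steps
```

The `order` list interleaves thread names in whatever order the scheduler chose. The work itself is always serialized.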

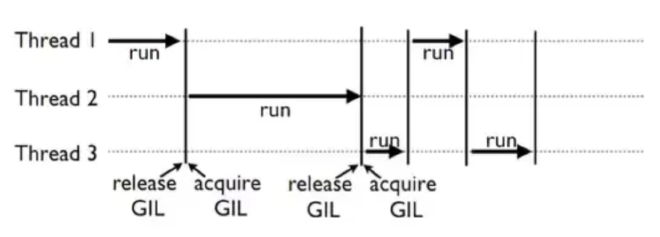

Suppose we are working with three threads. We would normally expect them to run in parallel, but the GIL prevents this. The figure shows an example of three threads running in Python.

Each thread acquires the GIL and runs, while all the other threads are stopped. On top of that, multithreaded execution pays for context switching between threads, a cost that a single-threaded run does not have.
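In CPython, how often the running thread is asked to hand the GIL to another thread is controlled by the "switch interval", which can be inspected and changed through `sys`. The following sketch reads the value, changes it temporarily, and then restores it.

```python
import sys

# CPython asks the running thread to release the GIL after this many seconds
interval = sys.getswitchinterval()
print(interval)  # 0.005 by default

# A longer interval means fewer forced switches between threads
sys.setswitchinterval(0.01)
print(sys.getswitchinterval())  # 0.01

sys.setswitchinterval(interval)  # restore the previous value
```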

Why Python uses the GIL

Then why does Python use a GIL that slows multithreaded code down? Mainly because it keeps reference counting simple and efficient. CPython manages memory with reference counting, backed by a garbage collector that cleans up reference cycles.
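Reference counting can be observed directly with `sys.getrefcount()`. Its absolute value includes a temporary reference for the call's own argument, so this sketch only looks at how the count changes when a second name is bound to the same list and then removed.

```python
import sys

data = []                              # a fresh list object
base = sys.getrefcount(data)           # baseline, including the call's temporary reference

alias = data                           # bind a second name to the same object
after_alias = sys.getrefcount(data) - base
print(after_alias)                     # 1: one more reference than before

del alias                              # drop that reference again
after_del = sys.getrefcount(data) - base
print(after_del)                       # 0
```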

In other words, Python keeps count of how many references point to each object. If multiple threads could update the same object's reference count at the same time, every object would need its own lock to keep that count correct. Instead of paying for a lock on every object, Python acquires and releases a single global lock.
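The following sketch shows the per-object alternative that the GIL avoids. The class `LockedRefCount` is hypothetical and is not CPython code. Each instance carries its own lock, and every increment and decrement of its count has to acquire and release that lock.

```python
import threading

class LockedRefCount:
    """Hypothetical object that protects its own reference count with a private lock."""

    def __init__(self):
        self.refcount = 1
        self._lock = threading.Lock()   # one lock per object

    def incref(self):
        with self._lock:                # every increment pays for acquire/release
            self.refcount += 1

    def decref(self):
        with self._lock:
            self.refcount -= 1

obj = LockedRefCount()

def borrow_and_release(times):
    # Each iteration takes a reference and then gives it back
    for _ in range(times):
        obj.incref()
        obj.decref()

threads = [threading.Thread(target=borrow_and_release, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(obj.refcount)  # 1: every incref was matched by a decref
```

With millions of objects, this means millions of locks and a lock operation on every reference change. A single global lock trades that overhead for the loss of parallel bytecode execution.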

Multithreading in Python is not always slow

```python
import random
import threading
import time

# Mixed work: five CPU-bound steps, each preceded by a short wait, plus a final wait
def working():
    for _ in range(5):
        time.sleep(0.1)
        max([random.random() for _ in range(10000000)])
    time.sleep(0.1)

# 1. Single thread: run the work twice, one after the other
s_time = time.time()
working()
working()
e_time = time.time()
print(f'Single thread: {e_time - s_time:.5f}')

# 2. Double threads: run the same work in two threads at once
s_time = time.time()
threads = []
for _ in range(2):
    threads.append(threading.Thread(target=working))
    threads[-1].start()
for t in threads:
    t.join()
e_time = time.time()
print(f'Double threads: {e_time - s_time:.5f}')
```

Result (elapsed time in seconds):

```
Single thread: 6.93310
Double threads: 6.05917
```

This time, the two-thread run is actually faster than the single-thread run. The reason is the `sleep()` calls. In a single thread, nothing else can be done while the thread sleeps. In the multithreaded version, `time.sleep()` releases the GIL while it waits, so the other thread can acquire it and do its computation in the meantime.
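The following minimal sketch isolates the effect of `sleep()`. It runs two 0.2-second sleeps back to back and then runs them in two threads. The helper names `wait`, `sequential`, and `threaded` are hypothetical. Only `time.sleep`, `time.perf_counter`, and `threading.Thread` are standard APIs.

```python
import threading
import time

def wait(seconds):
    # time.sleep releases the GIL while blocked, so other threads can run
    time.sleep(seconds)

def sequential(n, seconds):
    start = time.perf_counter()
    for _ in range(n):
        wait(seconds)
    return time.perf_counter() - start

def threaded(n, seconds):
    start = time.perf_counter()
    threads = [threading.Thread(target=wait, args=(seconds,)) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start

seq = sequential(2, 0.2)   # roughly 0.4 s: the waits add up
thr = threaded(2, 0.2)     # roughly 0.2 s: the waits overlap
print(f"sequential: {seq:.2f}s, threaded: {thr:.2f}s")
```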

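In real-world code, the waiting usually comes from I/O operations (file, network, or database access) rather than `sleep()`. Blocking I/O calls in the standard library also release the GIL, so for such I/O-bound workloads multithreading can improve performance in Python even with the GIL.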
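For I/O-bound tasks, a common pattern is a thread pool from `concurrent.futures`. In the following sketch, the function `fake_download` is a hypothetical placeholder in which `time.sleep` stands in for waiting on a network or disk. Five such waits run concurrently instead of one after another.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_download(item_id):
    """Hypothetical I/O-bound task: sleep stands in for waiting on a network or disk."""
    time.sleep(0.2)          # blocking wait; the GIL is released meanwhile
    return item_id * 2       # placeholder result

item_ids = list(range(5))

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    results = list(pool.map(fake_download, item_ids))  # results keep input order
elapsed = time.perf_counter() - start

print(results)               # [0, 2, 4, 6, 8]
print(f"{elapsed:.2f}s")     # close to 0.2 s rather than 5 * 0.2 = 1.0 s
```

`pool.map` returns results in the order of `item_ids`, regardless of which thread finishes first.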